EgoSchema

A Diagnostic Benchmark for Very Long-form Video Language Understanding

Karttikeya Mangalam

Raiymbek Akshulakov

Jitendra Malik

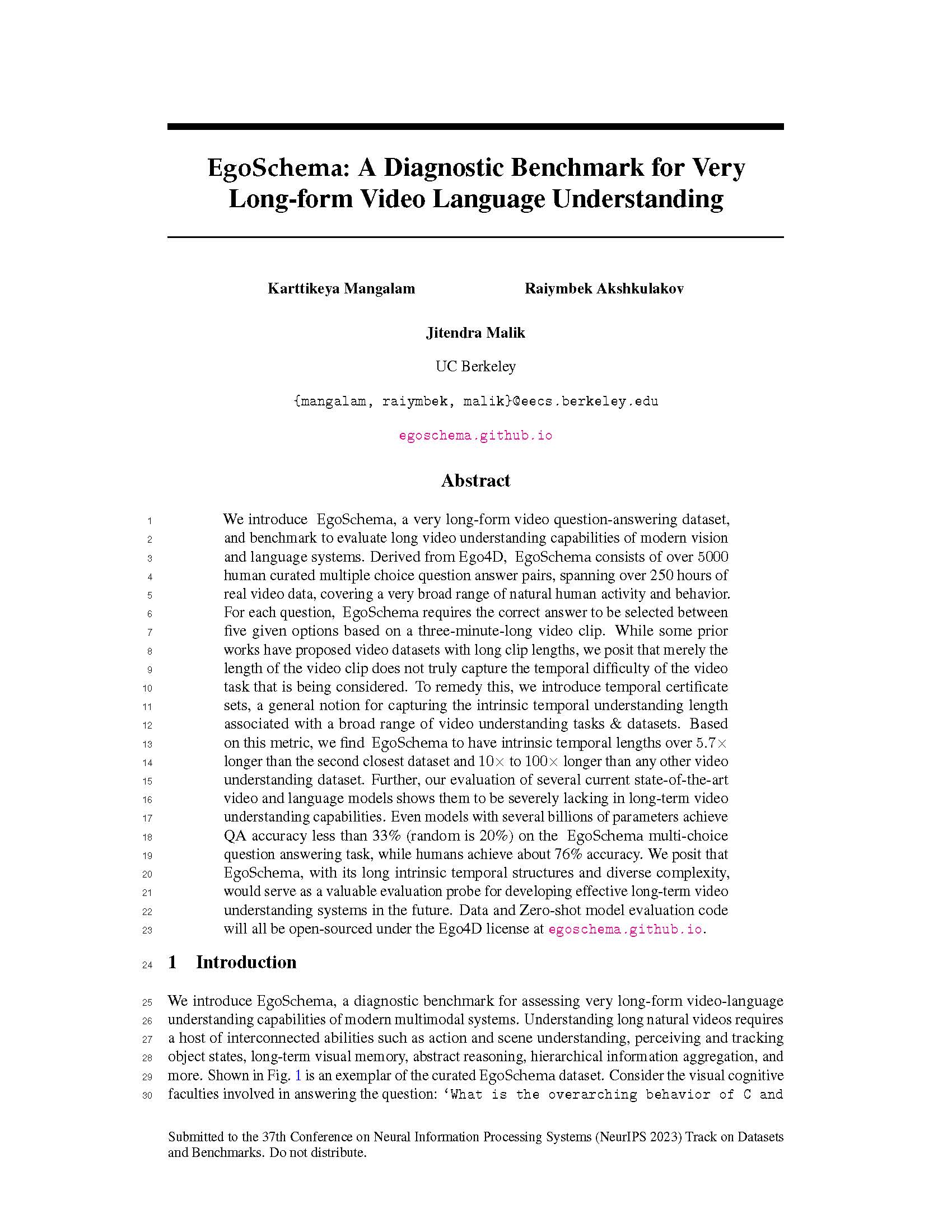

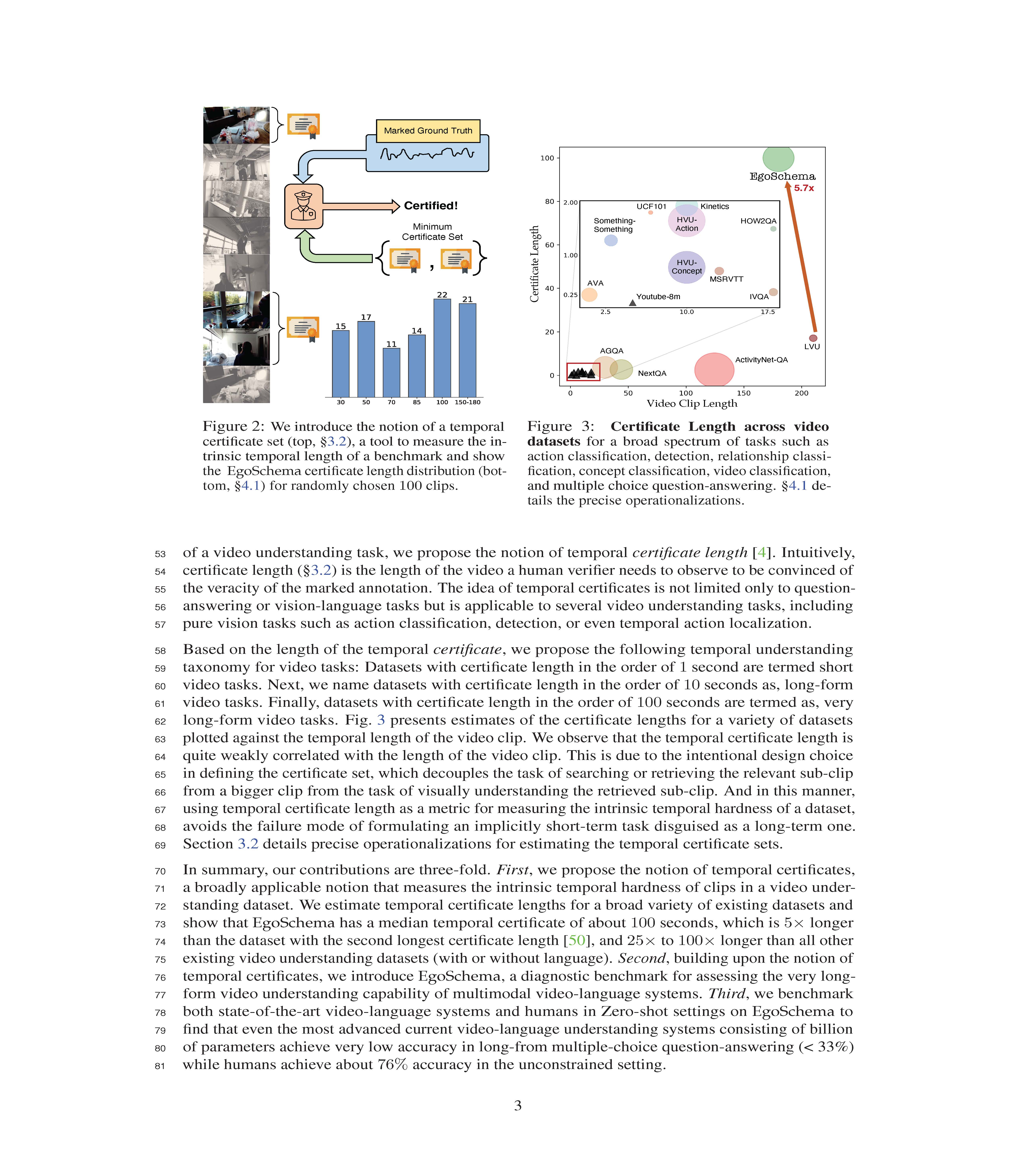

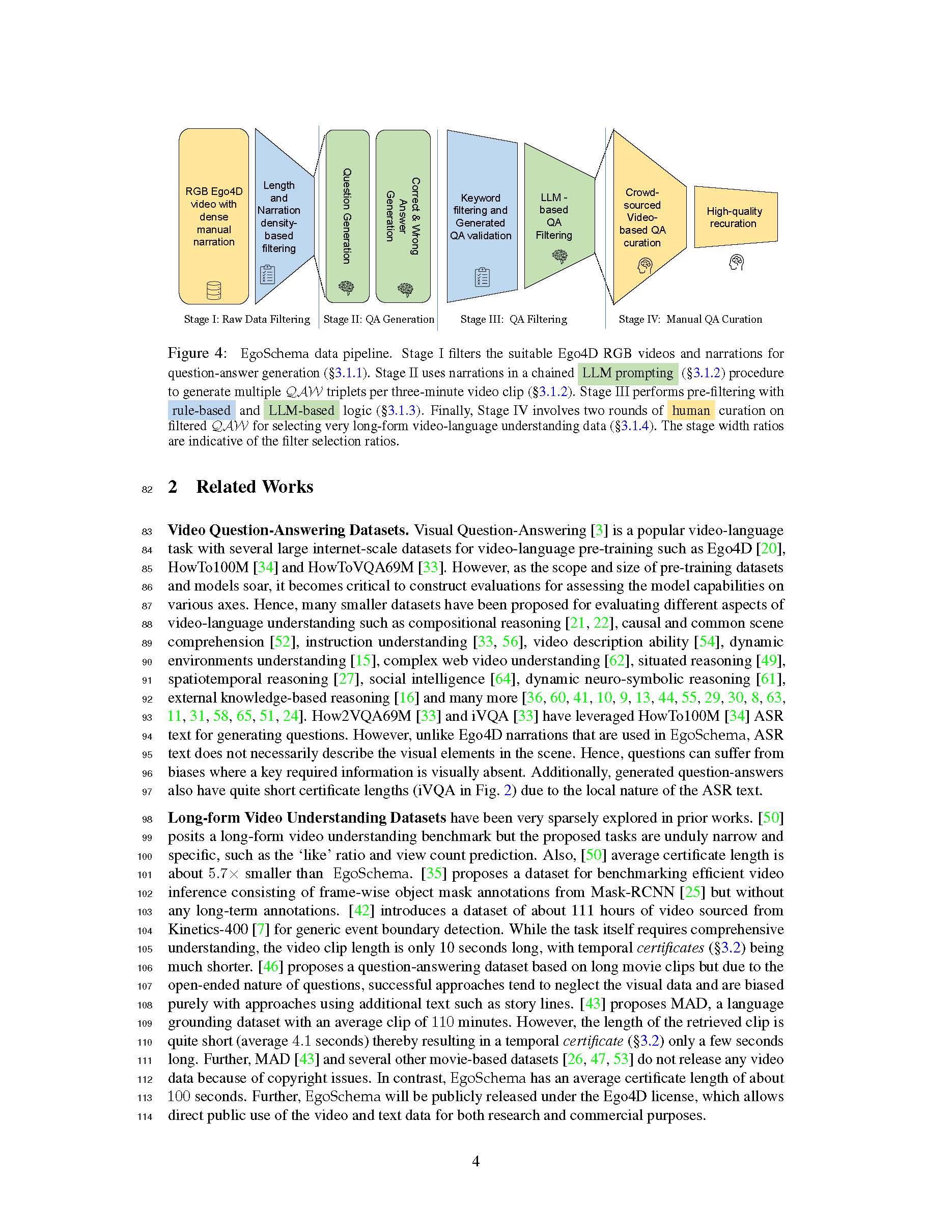

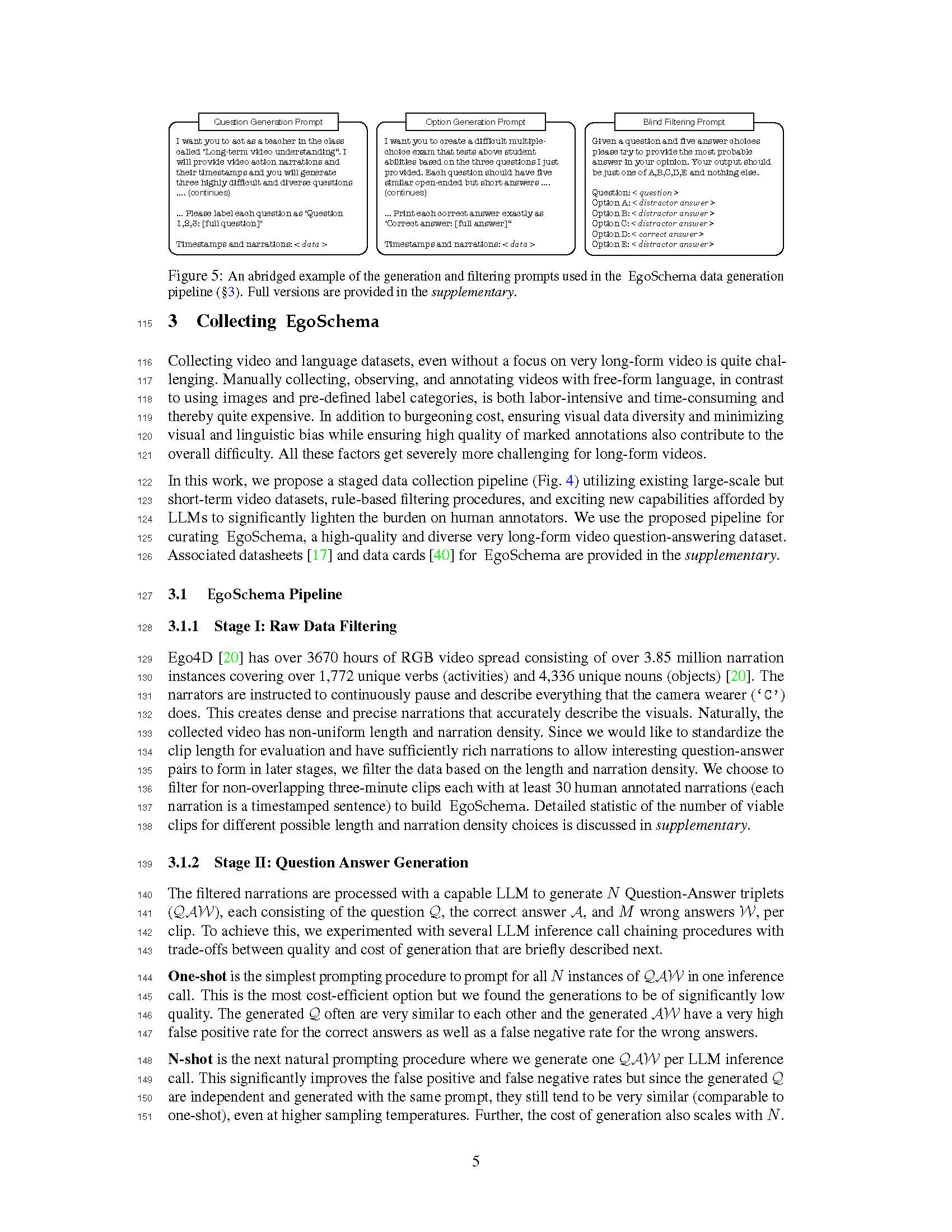

We introduce EgoSchema, a very long-form video question-answering dataset and benchmark for evaluating the long video understanding capabilities of modern vision and language systems.

Derived from Ego4D, EgoSchema consists of over 5,000 human-curated multiple-choice question-answer pairs spanning over 250 hours of real video data and covering a very broad range of natural human activity and behavior. For each question, EgoSchema requires the correct answer to be selected from five given options based on a three-minute-long video clip.
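As a rough illustration of the five-way multiple-choice format described above, the sketch below scores a set of predictions by accuracy and states the random-guess baseline. The `Question` record, its field names, and the `accuracy` helper are hypothetical and chosen only for this example; they are not the schema of the released files or the official benchmarking code.

```python
from dataclasses import dataclass
from typing import Mapping, Sequence

NUM_OPTIONS = 5  # each question offers five answer options

# Picking uniformly at random among five options gives 20% expected accuracy.
CHANCE_ACCURACY = 1 / NUM_OPTIONS


@dataclass(frozen=True)
class Question:
    # Hypothetical record layout; the released files may use different names.
    question_id: str
    question: str
    options: Sequence[str]
    answer_index: int  # position of the correct option, 0 to 4


def accuracy(questions: Sequence[Question], predictions: Mapping[str, int]) -> float:
    """Return the fraction of questions whose predicted option index is correct.

    A question with no entry in `predictions` is counted as answered wrongly.
    """
    if not questions:
        raise ValueError("no questions to score")
    correct = 0
    for q in questions:
        if len(q.options) != NUM_OPTIONS:
            raise ValueError(f"{q.question_id}: expected {NUM_OPTIONS} options")
        if predictions.get(q.question_id) == q.answer_index:
            correct += 1
    return correct / len(questions)


if __name__ == "__main__":
    # Toy data invented for the example, not taken from the dataset.
    toy = [
        Question("q1", "What is the person mainly doing?", ["a", "b", "c", "d", "e"], 2),
        Question("q2", "Why is the tool used?", ["a", "b", "c", "d", "e"], 0),
    ]
    print(accuracy(toy, {"q1": 2, "q2": 4}))
```

Counting unanswered questions as wrong keeps the denominator fixed at the full question set, so scores from different models remain comparable under this sketch.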

Download script

You can download the dataset by running the script at the following link.

Direct download

A folder with all videos is also hosted on Google Drive.

Ego4D metadata

The file that connects EgoSchema questions to the original Ego4D metadata can be found here. (in development)

Benchmarking code

Comprehensive guides and setup procedures for benchmarking models from the paper can be found here.

Karttikeya Mangalam

PhD Student in Computer Vision at Berkeley AI Research (BAIR)

University of California, Berkeley

Raiymbek Akshulakov

Fifth Year Masters in Computer Vision at Berkeley AI Research (BAIR)

University of California, Berkeley
